How to Teach Artificial Intelligence: A Complete Guide

Teaching artificial intelligence isn't just about Python libraries and algorithms. To do it right, you need a solid educational framework built on critical thinking, ethical awareness, and hands-on skills. It’s a shift from technical jargon to genuine AI literacy for all learners. This prepares them not just for a job in tech, but for life as thoughtful citizens in a world increasingly shaped by AI.

Building Your Foundation for Teaching AI

Before anyone writes a single line of code, the real first step is laying a strong foundation. This means weaving AI principles into subjects far beyond computer science. Think about how AI intersects with history, influences modern art, or raises questions in social studies. That’s how you create a holistic learning experience.

This interdisciplinary approach is non-negotiable. When students see AI not just as code but as a force actively shaping society, they start asking deeper, more important questions. That’s the mindset they need to critically engage with the technology they’ll be using—and creating—for the rest of their lives.

Fostering Genuine AI Literacy

The ultimate goal here is to cultivate true AI literacy. This isn't just about knowing how to prompt a chatbot. It's the ability to understand, evaluate, and thoughtfully apply AI in different situations. It means getting a real feel for the data that fuels these systems, learning to spot potential biases, and grappling with the ethical consequences of deploying them.

The need for this is more urgent than ever. Students are already all-in on this technology. A recent study found that by 2025, an incredible 92% of students globally were using AI tools for their schoolwork, a huge jump from just 66% the year before. This explosion in use means we have to provide structured education to guide them. You can dig into the specifics in this study on student AI usage.

A successful AI education program doesn't just produce coders; it cultivates responsible innovators. The focus must be on building a framework that champions both technical competence and a strong ethical compass from the very beginning.

Integrating Expert Voices

One of the most powerful ways to build this foundation is to bring the real world into your classroom. Connecting students with industry practitioners through a speaker roster provides context that no textbook can match.

Imagine your students hearing directly from a leading AI ethicist like Dr. Rumman Chowdhury, who can break down the real-world impact of algorithmic bias from her experience building responsible AI systems at major organizations. Or think about the lightbulb moments when a machine learning pioneer like Cassie Kozyrkov, Google's first Chief Decision Scientist, makes complex concepts feel simple and intuitive.

These voices do more than just explain technology. They demystify the field, reveal diverse career paths, and inspire students to see a place for themselves in the future of AI. That kind of direct exposure is an absolutely invaluable part of the learning journey.

Designing Your Future-Ready AI Curriculum

A truly great AI curriculum isn't just a list of topics; it's a carefully crafted journey. It needs to guide learners from the basic building blocks to complex, real-world applications. A modular approach works best, letting you stack knowledge logically while staying nimble enough to adapt as the technology barrels forward.

Your first modules should be all about demystifying the tech. Start by drawing a clear line in the sand: this is what AI is, and this is what it isn't. It's the perfect time to unpack foundational concepts like machine learning, neural networks, and the critical role of data. Use plenty of relatable analogies to make these abstract ideas click.

Once that foundation is set, you can start branching out into more specialized areas. Each module should tackle a critical piece of AI literacy, turning abstract theory into something tangible and useful.

Core Curriculum Modules

To build a program that really sticks, structure your curriculum around these key pillars:

- Data Literacy and Algorithmic Thinking: This one is non-negotiable. Learners must understand that data is the fuel for any AI system and how algorithms use that fuel to find patterns and make predictions. A big part of this involves designing an engaging artificial intelligence lesson plan that makes these concepts feel real.

- Practical AI Applications: Don't just tell them, show them. Dig into how AI is already changing industries, from predictive marketing campaigns to life-saving diagnostic tools in healthcare. This real-world context is what grabs people and keeps them invested.

- Societal and Ethical Impacts: This is where you move beyond the how and start asking why and should we. Talk about real cases of algorithmic bias, the thorny issues around data privacy, and how AI is reshaping the job market. The critical thinking skills developed here are just as important as the technical ones.

This is also a fantastic opportunity to bring in an expert voice to light a fire. Imagine having a speaker like Nina Schick, a leading advisor on Generative AI, unpack the societal shifts this tech is driving. Or picture Dr. Ayesha Khanna explaining how future cities will be built around intelligent, interconnected systems. These perspectives add a layer of relevance that a standard lecture just can't match.

From Theory to Tangible Skills

The real test of any AI curriculum is whether it empowers people to build something. This is where project-based learning becomes your best friend, acting as the bridge between knowing the concepts and having the skills.

These projects don't need to be overwhelmingly complex. The goal is to illustrate core principles through hands-on creation.

Try challenging your students with projects like these:

- Build a Basic Movie Recommendation Engine: Using a simple dataset, they can code a program that suggests movies based on user ratings. It’s a perfect way to see personalization algorithms in action.

- Develop a News Article Classifier: This project involves training a simple model to sort articles into categories like "sports" or "tech," teaching the fundamentals of text classification.

- Create a Simple Chatbot: With accessible tools, students can design a chatbot for a specific task, like answering FAQs for a school club. They'll get a firsthand look at conversational AI logic.
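As a starting point for the first project, here is a minimal sketch of a recommendation engine in plain Python. It assumes a tiny, made-up ratings dictionary, and the helper names `cosine_similarity` and `recommend` are hypothetical choices for illustration. It scores each unseen movie by adding up other users' ratings, weighted by how similar those users' tastes are. This is one simple approach among many, not a production design.

```python
from math import sqrt

# Hypothetical ratings (1-5 stars); user names and data are invented for illustration.
ratings = {
    "ana":   {"Alien": 5, "Up": 1, "Heat": 4},
    "ben":   {"Alien": 4, "Up": 2, "Heat": 5, "Jaws": 5},
    "chloe": {"Alien": 1, "Up": 5, "Coco": 5},
}

def cosine_similarity(a, b):
    """Cosine similarity computed over the movies both users have rated."""
    shared = set(a) & set(b)
    if not shared:
        return 0.0
    dot = sum(a[m] * b[m] for m in shared)
    norm_a = sqrt(sum(a[m] ** 2 for m in shared))
    norm_b = sqrt(sum(b[m] ** 2 for m in shared))
    return dot / (norm_a * norm_b)

def recommend(user, ratings, top_n=1):
    """Rank movies the user has not seen by a similarity-weighted sum of ratings."""
    scores = {}
    for other, other_ratings in ratings.items():
        if other == user:
            continue
        sim = cosine_similarity(ratings[user], other_ratings)
        for movie, stars in other_ratings.items():
            if movie not in ratings[user]:
                scores[movie] = scores.get(movie, 0.0) + sim * stars
    return sorted(scores, key=scores.get, reverse=True)[:top_n]

print(recommend("ana", ratings))  # ['Jaws']
```

A useful classroom follow-up is to ask why "Jaws" wins for this user: the rater who liked it has tastes close to hers, so that rating counts for more. Students can then change a few ratings and predict the new result before running the code.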

By grounding the curriculum in project-based work, you transform passive learners into active creators. The goal is not just to teach artificial intelligence, but to cultivate a mindset of inquiry, problem-solving, and responsible innovation.

This hands-on approach is what cements understanding and builds real confidence. It also mirrors the iterative, problem-solving vibe of actual AI development—a crucial lesson for anyone hoping to innovate in this space. Building out these skills is a core part of any successful corporate learning and development strategy aimed at preparing a workforce for what's next.

Building AI Confidence for Teachers and Students

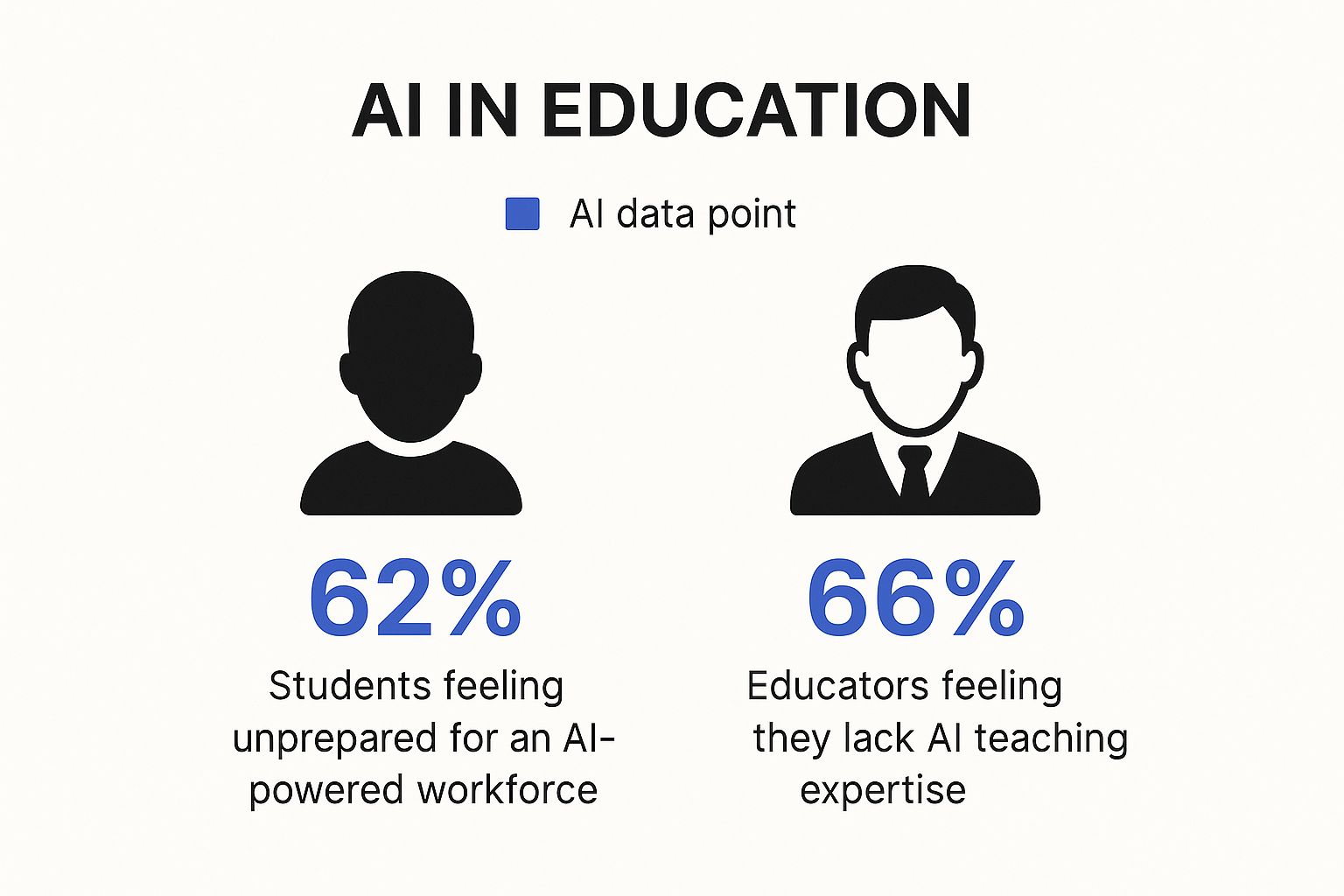

Let's be honest: one of the biggest roadblocks to teaching AI has nothing to do with technology. It's about psychology. There's a nagging feeling of being unprepared that hits both educators and students, creating a confidence gap that stops exploration right when it's needed most.

Even as AI tools become a part of our daily lives, there's a huge disconnect between using them and truly understanding them. A 2025 Digital Education Council survey found that 58% of students feel they don't know enough about AI, and 48% feel unready for a job market that demands these skills. Teachers are in a similar boat, with 40% admitting they're just beginners in AI literacy. You can dig into the numbers yourself in the full summary of AI in higher education surveys.

This feeling of being behind the curve is a shared experience, as the data below shows.

The numbers make it clear: a huge chunk of both learners and their teachers feel like they're playing catch-up. This means that building confidence has to be a core part of any AI curriculum.

Empowering Educators Through Smart Professional Development

Here's the secret: you don't have to be a machine learning engineer to teach AI well. The best educators are facilitators—guides who are willing to learn right alongside their students. It's all about building a solid foundation and staying curious.

Professional development is key to getting there. But instead of chasing some impossible level of expertise, the goal should be practical, continuous learning.

- Find targeted online workshops. Look for programs designed specifically for educators. They often come with ready-made lesson plans and project ideas you can use immediately.

- Join a community. Online forums or social media groups for teachers tackling AI are invaluable. They’re a place to ask questions, celebrate small wins, and find your people.

- Get insights from the experts. Bringing in an outside speaker can be a game-changer. An expert like Cassie Kozyrkov, who has a real talent for making complex AI concepts feel simple, can demystify the topic for an entire faculty in a single session.

This approach shifts your role from being the "expert" to being the person who empowers students to ask the right questions and find the answers together.

Nurturing a Growth Mindset in the Classroom

For students, it’s all about fostering a growth mindset. We need to reframe AI from this scary, monolithic subject into a set of tools they can play with, question, and ultimately learn to control.

Create a classroom where it's safe to experiment, even if the results are messy. Frame mistakes as learning opportunities—after all, that’s exactly how machine learning models improve.
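To make that comparison concrete, here is a minimal sketch of a model learning from its own mistakes. It assumes a made-up dataset that follows y = 2x and a one-parameter model; the function names and the learning rate are illustrative choices. At each step the model measures its error, then nudges its guess in the direction that reduces that error (gradient descent).

```python
# Hypothetical data following y = 2x; the model has to discover the 2.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0, 4.0, 6.0, 8.0]

def mean_squared_error(w):
    """Average squared gap between the model's guesses (w * x) and the truth."""
    return sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

def train(steps=10, learning_rate=0.05):
    """Start with a bad guess and repeatedly correct it using the error's slope."""
    w = 0.0
    errors = []
    for _ in range(steps):
        errors.append(mean_squared_error(w))
        # Slope of the error with respect to w: which way, and how far, we are off.
        gradient = sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)
        w -= learning_rate * gradient
    return w, errors

w, errors = train()
print(round(w, 3))           # close to 2
print(errors[0], errors[-1]) # the error starts large and shrinks
```

Printing the full `errors` list lets students watch the mistakes get smaller with every attempt, which is the point of the analogy.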

The goal isn't to create infallible experts overnight. It's to empower both educators and students to become confident, critical thinkers who are comfortable with the iterative and often messy process of learning and building with AI.

A great way to do this is by setting up low-stakes "AI sandboxes." Let students play with different tools without the pressure of a grade. This builds curiosity and resilience, which are far more valuable than memorizing a few technical terms.

Learning Alongside Your Students

The most freeing thing an educator can realize is that you don’t need all the answers. In a field moving as fast as AI, nobody does. When you embrace the role of a co-learner, it can completely transform your classroom for the better.

When a student stumps you with a question, turn it into a group mission. "I don't know, let's find out together." This models a critical real-world skill: how to find reliable information and learn on your own.

This shared journey builds incredible trust. It shows students that learning is a lifelong process, not a destination. By leaning into this role, you not only build your own confidence but also empower your students to become adaptable, resourceful people ready for whatever comes next.

Choosing the Right Tools for Hands-On AI Projects

Let's be honest: abstract AI concepts can feel a bit... floaty. It's not until a student actually gets their hands dirty and builds something that the theory really clicks. Hands-on projects are where passive knowledge gets converted into active skill.

The right tool is the bridge between a theoretical lesson and that genuine "aha!" moment.

Picking one can feel like a huge task, but it really just boils down to matching the tool's complexity to your students' current skill level and your end goal. The trick is to start with platforms that have a low barrier to entry. This lets learners score a quick win, build some momentum, and actually get excited about what's next.

Starting with No-Code and Low-Code Platforms

You don't need to be a Python wizard to teach the core ideas of machine learning. In fact, for most learners, starting without code is the best way to grasp the big ideas without getting tripped up by syntax.

User-friendly platforms offer a visual, intuitive path to understanding how models are trained and tested.

- Google's Teachable Machine: This is the perfect place to start. Students can train a simple model in minutes using just their webcam or microphone. They'll learn about data collection, training, and output in a way that’s fun and instantly gratifying.

- Machine Learning for Kids: This platform cleverly uses the block-based coding language Scratch to introduce machine learning concepts. It’s incredibly accessible, even for younger students or total beginners.

- DataRobot: For more advanced learners who are ready for a low-code environment, this platform automates a lot of the model-building grunt work. It frees them up to focus on the business problem and how to interpret the data.

These tools are fantastic for demystifying AI. They show that at its core, machine learning is about teaching a computer to recognize patterns—a concept anyone can understand through direct interaction. You can also explore other helpful resources like these 12 AI tools specifically for teachers to find the right fit for your classroom.
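For learners who are curious about what happens behind the visual interface, the collect, train, and predict loop can be sketched in a few lines of Python. This is not how any of the platforms above are implemented; it is a simple nearest-neighbor classifier on made-up measurements, with hypothetical names, meant only to show pattern recognition from labeled examples.

```python
from collections import Counter
from math import dist

# Hypothetical training data: (height_cm, weight_kg) pairs labeled by hand.
examples = [
    ((20.0, 4.0), "cat"),
    ((25.0, 5.0), "cat"),
    ((23.0, 4.5), "cat"),
    ((60.0, 30.0), "dog"),
    ((55.0, 25.0), "dog"),
    ((65.0, 32.0), "dog"),
]

def predict(features, examples, k=3):
    """Label a new point by majority vote among its k closest labeled examples."""
    nearest = sorted(examples, key=lambda ex: dist(features, ex[0]))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]

print(predict((22.0, 4.2), examples))   # cat
print(predict((58.0, 28.0), examples))  # dog
```

The model never receives a rule for what makes something a cat. It only compares new inputs to the examples it was given, which is why the quality and variety of the training data matter so much.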

When it comes to selecting a platform, it helps to see them side-by-side. Each tool is designed with a specific user and outcome in mind.

Comparison of AI Educational Tools

| Tool/Platform | Best For (Skill Level) | Key Features | Primary Learning Outcome |
|---|---|---|---|
| Teachable Machine | Beginner | Web-based, no-code interface; webcam/mic input; instant feedback | Grasping the basics of data collection, model training, and prediction in a tangible way. |
| Machine Learning for Kids | Beginner to Intermediate | Scratch-based block coding; integration with physical devices; guided projects | Understanding how ML models can be integrated into simple applications and games. |
| DataRobot | Intermediate to Advanced | Automated machine learning (AutoML); low-code environment; focus on business use cases | Learning to apply ML to real-world problems and interpret model performance metrics. |
| Scikit-learn | Intermediate to Advanced | Python library; comprehensive documentation; clean, consistent API | Building foundational machine learning skills in a professional coding environment. |

This comparison highlights the natural progression from visual, no-code tools that build conceptual understanding to powerful libraries used in professional settings.

Progressing to Code-Based Libraries

Once students have a solid conceptual handle on things, they're ready to graduate to the tools that power professional AI development. This is where they learn to build more customized and powerful solutions from the ground up.

The transition to code should feel like an upgrade—a way to gain more control and flexibility. While no-code tools are brilliant for learning concepts, coding libraries are what the pros use to build real-world applications.

- Scikit-learn: This is almost always the best first library for aspiring data scientists. It has a clean, consistent interface and amazing documentation, making it great for foundational tasks like classification, regression, and clustering.

- TensorFlow and PyTorch: These are the heavy hitters for deep learning. They're more complex, but they unlock the ability to build sophisticated neural networks for things like image recognition and natural language processing.

The most effective learning path isn't about finding a single, perfect tool. It's about creating a progression. Start with intuitive, visual platforms to build confidence and conceptual understanding, then gradually introduce code-based libraries as students get ready for more power and complexity.

This tiered approach meets learners where they are, preventing them from feeling overwhelmed while giving them a clear path toward professional-level skills. For a more detailed look at structuring this journey, our guide on how to implement AI provides a framework that can be easily adapted for educational settings.

Bringing Projects to Life with Expert Insights

Connecting these hands-on projects to the real world is what makes the learning truly stick. This is the perfect time to bring in an expert from the field who can show students how these very tools are being used to solve massive challenges.

Hearing from a practitioner makes the projects feel less like an academic exercise and more like a direct line into a future career.

Imagine this: after your students build a simple image classifier, you bring in a speaker like Dr. Rana el Kaliouby, a pioneer in Emotion AI. She can explain how similar—though vastly more complex—technologies are used to help machines understand human emotions, with applications in mental health and automotive safety.

Or, after a project involving chatbots, a session with Adam Cheyer, one of the co-founders of Siri, would be incredible. He can share a firsthand account of the challenges and breakthroughs behind creating a globally recognized AI assistant, making the students' small projects feel connected to a much bigger story of innovation.

These speakers don't just teach; they inspire. They transform a technical lesson into a vision of what’s possible.

Embedding AI Ethics into Your Teaching

Teaching students how to build with AI is only half the job. The real challenge—and the more important lesson—is teaching them why they're building it and for whom. AI ethics can't just be a single lecture tacked on at the end of the semester. It needs to be woven into every module, every project, and every classroom discussion.

This isn't about getting lost in abstract philosophy. It's about grounding the conversation in the real-world consequences students can actually see and feel, equipping them with a framework for responsible innovation from day one.

Making Abstract Concepts Concrete

Let's be honest, "AI ethics" can sound pretty theoretical. The best way to make it stick is with compelling, real-world case studies where things went wrong.

Move past the hypotheticals and get into the weeds of actual AI failures. Talk about the documented cases of algorithmic bias in hiring tools that systematically filtered out qualified candidates based on their gender. Discuss the facial recognition systems that demonstrated higher error rates for women and people of color, sometimes leading to devastating wrongful accusations.

These examples make the stakes feel immediate and personal. "Algorithmic bias" stops being a buzzword and becomes a tangible problem with a human cost. This forces students to think critically about the data they use and the assumptions baked into the models they create.
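One way to turn such a case study into a hands-on exercise is a simple fairness check. The sketch below assumes a fabricated list of screening decisions and hypothetical group labels; it does not reproduce any real system or dataset. It compares how often each group is shortlisted and reports the ratio of the lowest rate to the highest, where 1.0 would mean parity.

```python
from collections import defaultdict

# Hypothetical screening outcomes: (group, was_shortlisted). Fabricated for illustration.
decisions = [
    ("group_a", True), ("group_a", True), ("group_a", True), ("group_a", False),
    ("group_b", True), ("group_b", False), ("group_b", False), ("group_b", False),
]

def selection_rates(decisions):
    """Share of candidates in each group who were shortlisted."""
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, shortlisted in decisions:
        totals[group] += 1
        selected[group] += int(shortlisted)
    return {group: selected[group] / totals[group] for group in totals}

def disparity_ratio(rates):
    """Lowest selection rate divided by the highest; 1.0 means parity."""
    return min(rates.values()) / max(rates.values())

rates = selection_rates(decisions)
print(rates)                              # {'group_a': 0.75, 'group_b': 0.25}
print(round(disparity_ratio(rates), 2))   # 0.33
```

A single number like this does not prove or rule out unfairness, but it gives students something specific to question: where the gap comes from, and what the training data might have encoded.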

Responsible AI education isn't just about preventing bad outcomes; it's about proactively designing systems that are fair, transparent, and genuinely beneficial. We need to shift the default question from "Can we build this?" to "Should we build this?"

Fostering Critical Discussion and Debate

Once students understand the real-world implications, it's time to challenge them with messy questions that have no easy answers. This is where you can really sharpen their critical thinking.

Spark some debate with provocative prompts that force them to consider multiple viewpoints.

- Accountability: Who’s at fault when a self-driving car causes an accident—the owner, the manufacturer, or the programmer who wrote the code?

- Justice: Should AI be used to help determine criminal sentencing? What are the risks of introducing potentially flawed algorithms into the legal system?

- Privacy: When does a personalized ad cross the line into invasive surveillance? Where do we draw the line on data collection?

These aren't just academic exercises. The rise of AI in education makes these conversations urgent. Recent research found that 64% of students use AI for tutoring and 49% use it for career advice. But it doesn't stop there. An alarming 43% are seeking relationship advice from AI, and 42% are turning to it for mental health support. As reporting on the downsides of AI in schools makes clear, these trends raise serious ethical red flags around data privacy and the reliability of algorithmic guidance.

Amplifying the Message with Expert Voices

To truly drive these lessons home, bring in people who navigate these ethical minefields for a living. Hearing from a professional who grapples with the human impact of AI every day brings a level of authority and lived experience that no textbook can replicate.

Imagine the impact of a session with someone like Dr. Rumman Chowdhury, a pioneer in applied algorithmic ethics. She can offer a firsthand look at how massive organizations are trying to build more responsible AI and the very real challenges they face.

Similarly, a speaker like Nina Schick can provide a powerful macro view, discussing how generative AI is reshaping society and what kinds of ethical guardrails we need to put in place. These expert voices transform the ethics component of your curriculum from a box-ticking exercise into a source of inspiration, showing students what it truly means to be a responsible innovator.

Bring the Real World into Your Classroom with AI Experts

Nothing makes AI concepts click quite like hearing from the people who are actually building this stuff every day. Abstract lessons on machine learning or ethics suddenly get real when a pro shares stories from the trenches. A guest speaker can easily turn a theoretical discussion into a career-defining moment for your students.

When students connect with experts, it demystifies complex topics and shines a light on career paths they might never have considered. A talk from a leading AI ethicist like Dr. Rumman Chowdhury makes the consequences of algorithmic bias feel immediate and urgent. Hearing from a machine learning practitioner like Cassie Kozyrkov reveals the creative problem-solving that happens behind the code.

Finding the Right Speaker for Your Goals

The secret is matching the speaker's expertise to your curriculum's focus. If your students are wrestling with the societal impact of AI, a thought leader like Nina Schick can provide invaluable context on generative AI. If they're deep in a hands-on coding project, an engineer like Adam Cheyer can show them how those exact skills are used to build commercial products.

Think about the specific message you want to land:

- To demystify complex concepts: A speaker like Cassie Kozyrkov, Google's first Chief Decision Scientist, is brilliant at breaking down intimidating topics into intuitive, understandable ideas. A session with an expert like her can build the foundational confidence students need when they're just starting out. You can learn more about Cassie Kozyrkov's unique approach to decision intelligence to see how she makes AI accessible.

- For tough ethical discussions: To really dig into bias and fairness, an expert in responsible AI like Dr. Rumman Chowdhury can lead a powerful conversation. They can ground the theoretical debates in real-world case studies they've personally navigated, which is far more impactful than just reading about it.

- For career inspiration: Bringing in an innovator like Dr. Ayesha Khanna, who designs AI-powered future cities, gives students a direct look into the entrepreneurial side of AI. It shows them what it really takes to turn an idea into a functional, world-changing technology.

How to Structure an Engaging and Memorable Session

To get the most out of your guest speaker, a little prep goes a long way. Don't just set them up for a passive lecture; structure the session as a genuine, interactive conversation.

Before they arrive, give the speaker some context on what your students have been learning. Share a few of the key questions or challenges your class is wrestling with. This lets the expert tailor their insights, making the discussion far more relevant and powerful.

The goal of bringing in an expert isn't just to transfer information. It's to spark curiosity, challenge assumptions, and provide a human face to a field that can often feel abstract and impersonal.

Get your students to prepare their own questions in advance, too. This guarantees a lively Q&A that moves beyond surface-level topics and into what they really want to know. A well-planned expert session doesn’t just teach students about AI; it connects them directly to the heart of the industry.

Answering Your Top Questions About Teaching AI

When you start planning to teach AI, a handful of questions always seem to pop up. It's a fast-moving field, and it’s natural to wonder where to start, how to measure success, and how to keep up.

Let's walk through some of the most common questions we hear from educators. We'll give you straightforward, practical answers to help you build your program with confidence.

What Core Skills Do Students Need Before Learning AI?

It's a common misconception that students need advanced math and Python skills right out of the gate. While those are crucial for deep, technical AI work, the foundational concepts are surprisingly accessible.

For beginners, the real focus should be on building logical reasoning, data literacy, and creative problem-solving skills. Plenty of block-based coding platforms and visual tools can introduce core AI principles without a single line of code. This approach opens the door to a much wider and more diverse group of students.

How Can I Assess Student Learning Beyond Coding Assignments?

Looking beyond the code is where you'll find the most meaningful insights into a student's understanding. Purely technical tests miss the bigger picture.

Instead, try project-based evaluations. Ask students to identify a real-world problem and design an AI-powered solution for it. You can also review project portfolios, spark a debate on an ethical case study, or have them present a complex AI topic to a completely non-technical audience. These methods show you how well they grasp critical thinking, creativity, and communication—not just technical proficiency.

The most meaningful assessments in AI education often look beyond code. They evaluate a student's ability to think critically about the technology's impact, articulate complex ideas clearly, and apply their knowledge to solve genuine problems responsibly.

How Do I Keep My Curriculum Current in Such a Fast-Moving Field?

This is the big one, isn't it? The key is to anchor your curriculum in timeless principles, not temporary tools.

Focus on the foundational concepts that evolve slowly: machine learning models, the fundamental role of data, and ethical frameworks. These pillars will remain relevant no matter what new app is trending.

Then, you can layer on the current stuff. Here’s how:

- Integrate discussions about current events and new AI case studies as they happen.

- Bring in guest speakers from the industry to share up-to-the-minute insights.

- Encourage students to follow AI news and trends on their own and bring their findings to class.

This approach keeps your curriculum robust and relevant, giving students a solid foundation that outlasts any single technology.

Bringing the real world into your classroom is one of the most powerful ways to teach AI. Speak About AI connects you with leading experts who can demystify complex topics and inspire your students with firsthand knowledge from the front lines of innovation. Explore our roster of speakers and book an expert for your next class or event at https://speakabout.ai.