What Is Edge Computing: A Practical Guide to Boosting Tech

At its core, edge computing is a strategy that brings data processing and storage physically closer to where your data is actually created and needed. Instead of sending everything to a centralized cloud for analysis, the goal is to cut down response times and save on bandwidth.

A Simple Analogy to Understand Edge Computing

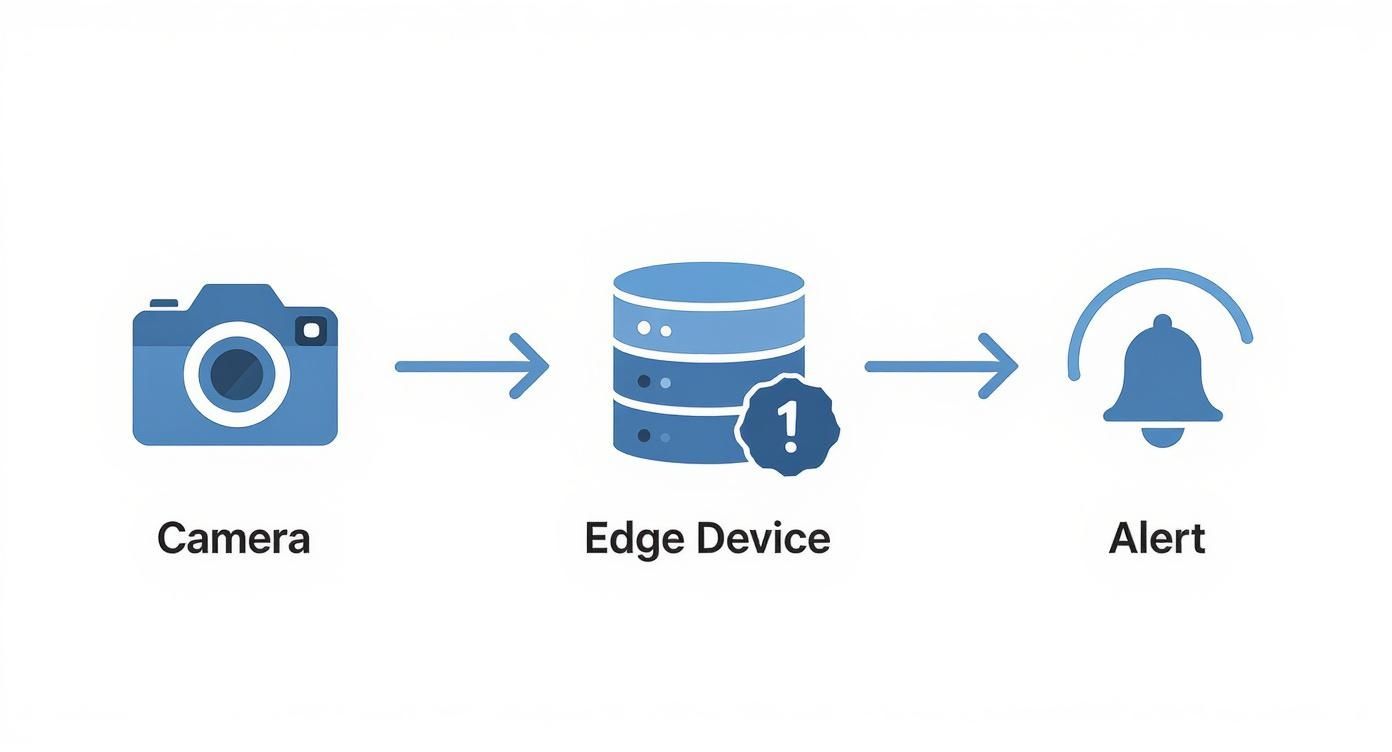

Think about a smart security camera in your home. With a traditional cloud setup, that camera detects motion and immediately starts streaming the entire video feed over the internet to a server hundreds of miles away. That server analyzes the video, decides if it's important, and then sends an alert back to your phone. The whole trip can introduce delays, especially with a spotty internet connection.

Now, let's apply the edge computing approach. The camera still detects motion, but instead of sending the raw video, a small, local processing unit—maybe built right into the camera or a nearby device—analyzes the footage on the spot. It has enough power to figure out if that motion was just a cat chasing a bug or an actual person at your door.

This local "edge" device then sends a tiny, highly relevant piece of data to you and the cloud: "Person detected at the front door." That's it. You've just seen the power of edge computing in action.
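The camera flow above can be sketched in a few lines of Python. This is a minimal illustration rather than a real camera API: `classify_frame` is a hypothetical stand-in for an on-device vision model, and the frame size, labels, and confidence threshold are assumptions made for the example.

```python
import json
from typing import Optional, Tuple

# Assumed size of one uncompressed 1080p RGB frame, in bytes.
RAW_FRAME_BYTES = 1920 * 1080 * 3


def classify_frame(frame: dict) -> Tuple[str, float]:
    """Hypothetical stand-in for an on-device vision model.

    A real camera would run a trained model here; this stub just
    reads a label that the example frame already carries.
    """
    return frame["label"], frame["confidence"]


def build_alert(frame: dict, location: str, min_confidence: float = 0.8) -> Optional[bytes]:
    """Return a small JSON alert for a person, or None for anything else."""
    label, confidence = classify_frame(frame)
    if label != "person" or confidence < min_confidence:
        return None  # a cat chasing a bug never leaves the device
    message = {"event": "person_detected", "location": location}
    return json.dumps(message).encode("utf-8")


alert = build_alert({"label": "person", "confidence": 0.93}, "front door")
ignored = build_alert({"label": "cat", "confidence": 0.97}, "front door")
print(alert, ignored)
print(f"alert is {len(alert)} bytes vs {RAW_FRAME_BYTES} bytes for one raw frame")
```

The point of the sketch is the size gap: the alert is a few dozen bytes, while a single raw frame under these assumptions runs to several megabytes.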

From Centralized to Local Power

This shift marks a major departure from the classic cloud model, which is built on massive, centralized data centers. As AI expert Bernard Marr notes, "We are in the midst of a historic moment when data, computing, and AI are coming together to create an intelligence explosion that will reshape every aspect of our lives." This decentralized approach is the framework making it all possible.

“Edge computing is about bringing the power of the cloud closer to where the data is actually being generated.” - Bernard Marr

This decentralized model isn't just a novelty; it's a necessity. With billions of IoT devices—from factory sensors to connected cars—generating staggering amounts of data, sending every last byte to the cloud is often slow, impractical, and incredibly expensive.

Why Edge Computing vs Cloud Computing Matters

To really get why this shift is happening, it’s helpful to see the two models side by side. While cloud computing is fantastic for heavy-duty processing and large-scale storage, edge computing excels where speed and local context are critical.

Here’s a quick breakdown of their key differences:

Edge Computing vs Cloud Computing At a Glance

| Characteristic | Edge Computing | Cloud Computing |
|---|---|---|
| Data Processing Location | Near the data source (e.g., on the device) | Centralized, remote data centers |
| Latency | Very low (milliseconds) | Higher (can be seconds) |
| Bandwidth Usage | Low, as only key data is sent | High, as raw data is often transferred |
| Best For | Real-time applications, IoT, AR/VR | Big data analytics, large-scale storage |
| Connectivity Reliance | Can operate offline or with poor connection | Requires a stable internet connection |
| Scalability | Distributed; scales with device additions | Highly centralized and scalable on demand |

Ultimately, edge and cloud aren't competitors—they're partners. Edge handles the immediate, time-sensitive tasks, while the cloud takes care of the long-term storage, complex analytics, and big-picture insights.

Solving the Latency Problem

The single biggest problem edge computing solves is latency—the delay you experience when data travels over a network. For a lot of modern tech, even a one-second delay is a deal-breaker.

Just think about these scenarios:

- Autonomous Vehicles: A self-driving car needs to process data from its sensors instantly to slam on the brakes. It simply can’t afford to wait for instructions from a server in another state.

- Industrial Automation: A robot on an assembly line has to react in milliseconds to a problem to prevent a costly shutdown or a dangerous accident.

- Augmented Reality (AR): For AR glasses to feel realistic, digital information has to be overlaid on the real world with no perceptible lag.

By putting the processing power right where the action is, edge computing delivers the speed and reliability these technologies demand. It's the foundational layer for a world that's becoming smarter, faster, and more connected every day.

How Edge Computing Actually Works

To really get what edge computing is, let’s follow a single piece of data on its journey. The trip starts right at the source—maybe a sensor on a factory floor, a smart traffic camera, or even a medical wearable on someone's wrist. In the old way of doing things, the raw data from that source would begin a long haul across the internet to a centralized cloud server.

Edge computing flips this script entirely. Instead of that long-distance trek, the data travels just a few feet to a nearby edge device. This could be a small but powerful computer called a gateway, a local server tucked away in a back room, or even the smart device itself. Think of it as a local command center, ready to process information the second it arrives.

The Local Decision-Making Process

Once that data hits the edge device, the real magic begins. This is where initial analysis, filtering, and even complex AI-driven decisions happen on the spot. It's a bit like a local news crew editing their raw footage and sending only the polished, final story to the national headquarters. This local processing is way more efficient than blasting terabytes of unfiltered video or sensor readings to the cloud.

This diagram breaks down that data flow, from creation to action.

As the visual shows, the edge device acts as an intelligent filter. It analyzes information and triggers a specific action without ever needing to phone home to the central cloud first.

This localized approach is the secret sauce for enabling the split-second responses modern applications demand. By handling tasks right where they happen, the architecture nails several critical goals:

- Reduces Internet Bandwidth: Only essential summaries or alerts get sent to the cloud. This dramatically cuts down on data traffic and the costs that come with it.

- Frees Up the Cloud: The central cloud is saved for the big-picture stuff—long-term data storage, massive-scale analytics, and training machine learning models.

- Enables Real-Time Action: Decisions are made in milliseconds. This isn't just nice to have; it's absolutely necessary for things like autonomous vehicles or industrial safety systems.
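To make the bandwidth point concrete, here is a minimal sketch of an edge device collapsing a window of raw readings into one summary before anything goes to the cloud. The function name `summarize_window`, the 10 Hz sampling rate, and the alert threshold are all assumptions chosen for illustration.

```python
import json
import statistics


def summarize_window(readings: list, alert_above: float) -> dict:
    """Collapse a window of raw readings into one compact summary."""
    return {
        "count": len(readings),
        "mean": round(statistics.fmean(readings), 2),
        "max": max(readings),
        "alert": max(readings) > alert_above,
    }


# One minute of hypothetical temperature samples at 10 Hz (600 values).
window = [70.0 + (i % 5) * 0.1 for i in range(600)]
summary = summarize_window(window, alert_above=85.0)

# Compare what would be uploaded in each case, using JSON as the wire format.
raw_bytes = len(json.dumps(window))
summary_bytes = len(json.dumps(summary))
print(summary)
print(f"raw: {raw_bytes} bytes, summary: {summary_bytes} bytes")
```

The raw window stays on the device; only the summary, and the alert flag inside it, would need to travel upstream.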

Empowering the Edge with AI

This on-the-spot analysis is often powered by specialized AI models running directly on the edge device. As leading AI strategist Tom Allen explains, deploying AI at the edge is crucial for responsive, intelligent systems. Getting these models to work right requires careful planning, a topic you can explore in our guide on how to implement AI.

Ultimately, this distributed architecture creates a system that's more responsive, resilient, and efficient. It’s not about replacing the cloud, but rather augmenting it. You end up with a powerful partnership where each part of the system handles the tasks it’s best suited for. This collaborative model is the core of how edge computing actually works.

The Key Benefits of Processing Data Locally

The rush toward edge computing isn't just hype—it's fueled by some seriously powerful advantages. When you process data right where it's created, you fundamentally change how your applications work, unlocking real-world gains in speed, security, reliability, and cost.

This shift is creating some major market momentum. The U.S. edge computing market is on track to hit around USD 7.2 billion by 2025 and is expected to jump to a staggering USD 46.2 billion by 2033. This explosion is happening because sectors like manufacturing and healthcare need real-time processing, and for them, delays just aren't an option. You can dig into more insights on this growth over at imarcgroup.com.

Near-Instantaneous Speed and Low Latency

The most obvious win with edge computing is the massive drop in latency. Think about it: when data has to travel hundreds of miles to a cloud server and back, even a tiny delay can be a big problem. Edge processing gets rid of that long round trip.

For a self-driving car, that’s the difference between spotting a pedestrian in time and a catastrophic accident. For a surgeon using a robotic arm for a remote procedure, lag has to be kept as close to zero as possible to make precise, life-saving movements. Edge computing is what makes that level of responsiveness a reality.
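A rough back-of-the-envelope calculation shows why distance matters. The sketch below assumes signals travel through optical fiber at about 200,000 km per second (roughly two-thirds the speed of light) and counts only propagation delay. Real round trips are longer once routing, queuing, and server processing are added, and the distances are illustrative.

```python
# Approximate speed of light in optical fiber (about two-thirds of c),
# expressed in kilometers per millisecond.
FIBER_KM_PER_MS = 200.0


def round_trip_ms(distance_km: float) -> float:
    """Lower bound on round-trip time from propagation delay alone."""
    return 2 * distance_km / FIBER_KM_PER_MS


# Illustrative distances, not measurements.
scenarios = [
    ("on-site edge gateway", 0.1),
    ("regional data center", 300),
    ("distant cloud region", 3000),
]
for label, km in scenarios:
    print(f"{label:>22}: {round_trip_ms(km):.3f} ms minimum")
```

Under these assumptions, a server 3,000 km away can never answer in less than about 30 ms, no matter how fast its hardware is, while an on-site device pays almost nothing for distance.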

Enhanced Security and Data Privacy

Keeping your sensitive information on-site is a huge security win. As data privacy expert Sheila FitzPatrick often emphasizes, when data from a hospital's patient monitor or a bank’s security camera gets processed locally, it never has to travel across the public internet. This drastically shrinks its exposure to potential attacks.

By minimizing the amount of data you send to the cloud, you shrink the potential attack surface. This localized approach is a cornerstone of modern security, especially in industries governed by strict compliance regulations like healthcare and finance.

This method lines up perfectly with strong security protocols. Protecting information right at the source is a critical step, and you can explore more strategies in our guide to data privacy best practices.

Greater Reliability and Uptime

What happens to your smart factory when the internet goes out? If it’s totally cloud-dependent, everything grinds to a halt. Edge systems, on the other hand, are built to be resilient.

Because they can process data and operate on their own, edge devices make sure critical operations keep humming along, even during a network outage. An automated assembly line can continue its work, and a retail store's point-of-sale system can keep ringing up sales offline.
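One common way to get this kind of resilience is a store-and-forward buffer: the device keeps working, queues events locally, and delivers them once the link returns. The sketch below is one possible shape for that pattern, not a specific product's design. The class name, the `send` callable, and the simulated uplink are all hypothetical.

```python
from collections import deque


class StoreAndForward:
    """Queue events locally and flush them when the uplink is available."""

    def __init__(self, send, max_events: int = 1000):
        self._send = send                       # callable that raises ConnectionError when offline
        self._queue = deque(maxlen=max_events)  # oldest events are dropped if the buffer fills

    def record(self, event: dict) -> None:
        """Store the event locally, then try to deliver everything queued."""
        self._queue.append(event)
        self.flush()

    def flush(self) -> int:
        """Try to send queued events in order; return how many were delivered."""
        sent = 0
        while self._queue:
            try:
                self._send(self._queue[0])
            except ConnectionError:
                break  # still offline; keep the event for the next attempt
            self._queue.popleft()
            sent += 1
        return sent

    @property
    def pending(self) -> int:
        return len(self._queue)


# Simulated uplink for the example: a flag toggles the outage.
link = {"online": False}
delivered = []


def fake_uplink(event):
    if not link["online"]:
        raise ConnectionError("network down")
    delivered.append(event)


buffer = StoreAndForward(fake_uplink)
buffer.record({"sale": 1})
buffer.record({"sale": 2})   # both stay queued during the outage
link["online"] = True
buffer.record({"sale": 3})   # connectivity is back, so everything flushes in order
print(buffer.pending, delivered)
```

The bounded queue is a deliberate trade-off: on a device with limited storage, dropping the oldest events during a very long outage is usually preferable to running out of memory.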

Significant Cost Savings

Finally, edge computing can lead to some serious cost reductions. Continuously streaming raw data from thousands of IoT sensors to the cloud eats up a massive amount of bandwidth and runs up expensive storage bills.

By processing data locally, companies only need to send the important stuff—key insights or summaries—to the cloud. This simple change can slash bandwidth costs and cut down on the need for massive cloud storage, freeing up budget for other initiatives.

Real-World Examples of Edge Computing in Action

Theory is one thing, but seeing edge computing at work in the real world is where its power really hits home. This isn't just a futuristic concept; it’s a technology that is actively reshaping industries right now, enabling smarter, faster, and more reliable operations right where the action happens.

From buzzing factory floors to the cars on our streets, edge computing is the invisible force behind a new wave of intelligent applications. Let’s look at a few concrete examples of how it’s being put to work today.

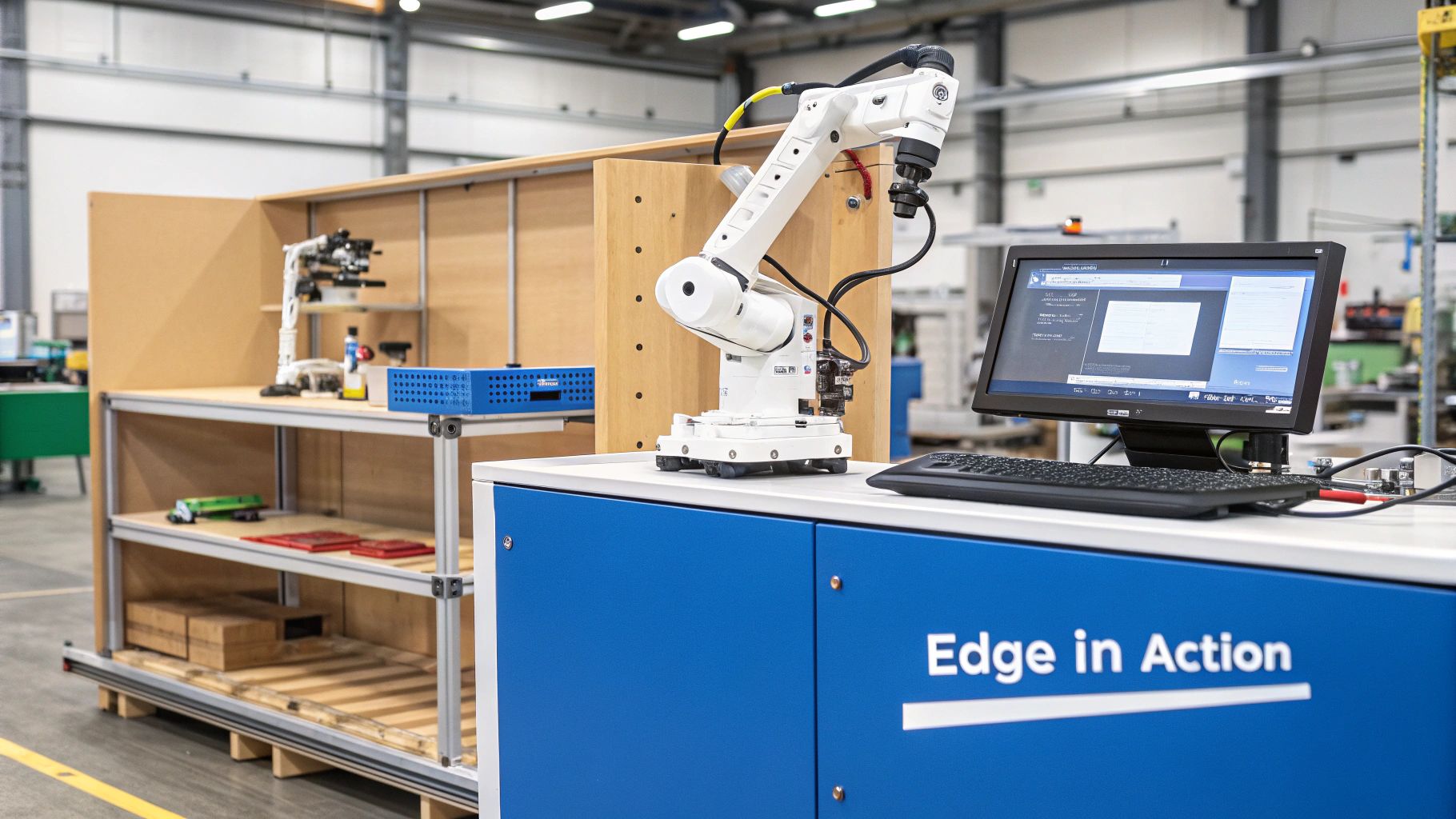

Smart Factories and Predictive Maintenance

In a modern manufacturing plant, unexpected downtime is the ultimate enemy. Smart factories use edge computing to see equipment failures coming before they ever bring the line to a halt.

It works like this: sensors on critical machinery constantly track things like vibration, temperature, and power usage. Instead of flooding the cloud with this data, an on-site edge device processes it right there in the factory. If it detects a subtle change in vibration that signals a motor is about to fail, it instantly triggers an alert for the maintenance crew.

This proactive approach is called predictive maintenance, and it’s a game-changer. It prevents expensive shutdowns, reduces repair costs, and makes equipment last longer.
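As a rough illustration of the idea, the sketch below flags a vibration reading that strays far from a rolling baseline. It swaps in a simple standard-deviation rule for whatever model a real plant would use, and the class name, window size, threshold, and sample values are all assumptions.

```python
from collections import deque
import statistics


class VibrationMonitor:
    """Flag readings that drift far from a rolling baseline."""

    def __init__(self, window: int = 20, threshold_sigmas: float = 3.0):
        self._history = deque(maxlen=window)
        self._threshold = threshold_sigmas

    def check(self, reading_mm_s: float) -> bool:
        """Return True if the reading looks anomalous versus recent history."""
        anomalous = False
        if len(self._history) == self._history.maxlen:
            mean = statistics.fmean(self._history)
            spread = statistics.pstdev(self._history)
            # Guard against a zero spread when the baseline is perfectly flat.
            anomalous = abs(reading_mm_s - mean) > self._threshold * max(spread, 1e-6)
        if not anomalous:
            self._history.append(reading_mm_s)  # only healthy readings update the baseline
        return anomalous


monitor = VibrationMonitor(window=20)
normal = [2.0 + 0.05 * (i % 4) for i in range(40)]   # hypothetical healthy motor
alerts = [monitor.check(v) for v in normal + [3.5]]   # final reading is a sudden jump
print(alerts.count(True), alerts[-1])
```

Because the check runs on the edge device, the alert can fire as soon as the jump is seen, and only the alert itself needs to leave the factory.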

Autonomous Vehicles and Drones

Self-driving cars are probably one of the most powerful examples of edge computing in action. Think about it: these vehicles generate a mind-boggling amount of data—sometimes terabytes a day—from their cameras, LiDAR, and countless other sensors.

For a car to make a split-second decision, like slamming on the brakes to avoid a pedestrian, it simply can’t wait for a round trip to the cloud. The delay, even if it’s just milliseconds, is too long. All of that critical thinking must happen inside the vehicle itself. Edge computing provides the intense, localized processing power needed to make sense of all that sensor data and react instantly.

This is exactly how advanced drone obstacle avoidance systems work, too. Just like a self-driving car, these drones process information on board to make immediate flight corrections, perfectly illustrating edge processing in a high-stakes environment.

Intelligent Retail Experiences

Retailers are getting creative with edge computing to build smarter stores without compromising customer privacy. By deploying smart cameras and sensors, they can analyze what’s happening in the store locally, without ever sending sensitive video footage to a central server.

By processing shopper analytics and inventory data on-site, retailers can gain immediate insights. This allows them to optimize store layouts, manage stock levels in real time, and reduce wait times at checkout, all while keeping personally identifiable information secure within the store.

This local approach means customer data stays private, building trust while simultaneously improving the entire shopping experience.

Connected Healthcare and Wearable Tech

When it comes to healthcare, speed and reliability can literally be a matter of life and death. Edge computing is becoming a critical piece of the puzzle for patient monitoring and rapid response.

Take wearable health monitors—devices that track things like heart rate or glucose levels. They can use edge processing to analyze a patient’s vitals right on the device. If the gadget detects a dangerous anomaly, like an irregular heartbeat, it can fire off an immediate, high-priority alert to doctors or paramedics.

By eliminating network latency from the equation, help gets dispatched faster, turning a potential crisis into a managed event.
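A simplified version of that on-device check might look like the following. Detecting a truly irregular heartbeat takes more than this, so the sketch substitutes a plain out-of-range rule with a short streak requirement. The function name, thresholds, and sample values are illustrative assumptions and not clinical guidance.

```python
def first_alert_index(heart_rates, low=40, high=150, consecutive=3):
    """Return the index at which an alert would fire, or None.

    An alert fires only after `consecutive` out-of-range readings in a
    row, so a single noisy sample does not page anyone.
    """
    streak = 0
    for index, bpm in enumerate(heart_rates):
        streak = streak + 1 if (bpm < low or bpm > high) else 0
        if streak >= consecutive:
            return index
    return None


# Illustrative samples in beats per minute.
single_spike = first_alert_index([72, 75, 160, 74, 73])
sustained = first_alert_index([72, 155, 162, 170, 168])
print(single_spike, sustained)
```

Requiring a short streak is one way to balance speed against false alarms: the device still reacts within a few samples, but a momentary sensor glitch stays local.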

The Future of Edge Computing and Emerging Trends

Looking ahead, edge computing is on track to become even more essential. We're seeing powerful trends push intelligence further and further away from big, centralized data centers. This isn't just a minor update; it’s a fundamental rethinking of how we build our digital infrastructure. We're moving from a cloud-first model to an intelligent, distributed network where the action happens right at the source.

Driving this evolution are two key partnerships: 5G networks and artificial intelligence. They are fueling explosive growth.

Market data from Spherical Insights & Consulting predicts the global edge computing market will rocket from USD 19.38 billion in 2024 to an incredible USD 1.52 trillion by 2035. That’s a compound annual growth rate of roughly 48.7%, a surge tied directly to the explosion of IoT devices and the growing demand for real-time processing.
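The growth rate can be checked from the two endpoints with the standard compound annual growth rate formula. The helper name `cagr` is ours; the inputs are the figures quoted above.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate as a fraction (0.10 means 10% per year)."""
    return (end_value / start_value) ** (1 / years) - 1


# Figures quoted above, in billions of USD: 19.38 (2024) to 1,520 (2035).
rate = cagr(19.38, 1520.0, years=2035 - 2024)
print(f"{rate:.1%}")
```

Over the 11 years from 2024 to 2035, this works out to roughly 48.7% per year, which matches the quoted figure.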

The Power Couple: 5G and Edge

The rollout of 5G is like pouring gasoline on the edge computing fire. Think of 5G as the fast, reliable highway that edge devices need to communicate. Its main selling points, high throughput and very low latency, are the perfect match for local processing.

This powerful combination opens the door to capabilities that felt like science fiction just a few years ago. For instance, 5G and edge can deliver:

- Immersive Augmented Reality (AR): Sending complex, high-fidelity graphics to smart glasses with no noticeable lag.

- Connected Vehicles: Letting cars talk to each other and with smart city infrastructure in real time to prevent accidents before they happen.

- Telesurgery: Giving surgeons instant, haptic feedback while they operate on patients from thousands of miles away.

The Rise of Edge AI

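The other major trend is the fusion of edge computing with artificial intelligence, often called Edge AI. (A closely related term, "TinyML," refers specifically to machine learning on very small, low-power hardware such as microcontrollers.) Edge AI means running sophisticated AI models directly on local devices instead of sending data to the cloud for analysis.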

Imagine a smart camera performing complex image recognition all on its own, or a factory robot adapting its movements without waiting for instructions from a central server. That's Edge AI in action.

Leading experts are championing this shift. As digital transformation expert Haydn Shaughnessy highlights, moving intelligence to the edge is crucial for creating adaptive, scalable AI systems. This decentralized approach makes devices smarter, more autonomous, and more private. Navigating this evolution requires a solid game plan, and our insights on digital transformation consulting services can help organizations chart the course.

By running AI models directly on devices, we are not just making them faster; we are making them more resilient, secure, and context-aware. Edge AI is the key to unlocking the true potential of a world filled with trillions of connected sensors and devices.

Ultimately, these trends make one thing clear: edge computing is not a fad. It’s the next logical step in our digital infrastructure—a necessary evolution to support a future where we expect instant, intelligent, and reliable computation everywhere.

Common Questions About Edge Computing

As edge computing moves from a niche concept to a core piece of our digital world, it’s natural to have questions. Getting a handle on what it is, what it isn’t, and how it fits with other technologies is key. Let’s clear up some of the most common questions people ask.

Does Edge Computing Replace the Cloud?

This is probably the biggest misconception out there. The short answer is no. Edge computing isn't here to kill the cloud; it’s here to make it better. They’re partners, each playing to their strengths.

The cloud is still the undisputed champ for the heavy lifting—storing massive datasets, running complex analytics, and training enormous AI models. Nothing beats it for centralized power and scale. The edge, on the other hand, is all about speed and immediate action right where the data is created.

Think of it this way: the edge is like a medic on the front lines, making critical, split-second decisions on the spot. The cloud is the advanced hospital where deep analysis, long-term strategy, and major operations happen. They work together to create a smarter, faster system.

This tag-team approach gives you the best of both worlds: instant local processing from the edge and powerful, big-picture intelligence from the cloud.

What Exactly Is an Edge Device?

"Edge device" isn’t a single type of gadget. It’s a catch-all term for any piece of hardware that brings computing power closer to the source of data. These devices come in all shapes and sizes, built for thousands of different jobs.

Here are a few examples to paint a picture:

- Simple Gateways: These are small, smart routers that gather data from dozens of sensors, filter out the noise, and only send the important stuff onward.

- Industrial PCs: Imagine rugged, heavy-duty computers bolted to a factory floor, analyzing vibrations from a machine to predict a failure before it happens.

- On-Premise Servers: Think of a powerful server tucked away in the back room of a retail store or hospital, processing sensitive customer or patient data locally for privacy and speed.

- Smart Cameras: Modern security cameras are often packed with processing chips that can identify a person, a vehicle, or a misplaced package all on their own, without sending video to the cloud.
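The "filter out the noise" job of a simple gateway can be as basic as a deadband filter, sometimes called report-by-exception: forward a reading only when it has moved meaningfully since the last one sent. This is one common technique and not the only way gateways filter data. The function name, threshold, and samples below are assumptions for illustration.

```python
def deadband_filter(readings, min_change: float):
    """Yield only readings that moved at least `min_change` from the last one sent."""
    last_sent = None
    for value in readings:
        if last_sent is None or abs(value - last_sent) >= min_change:
            last_sent = value
            yield value


# Hypothetical thermostat samples in degrees Celsius.
samples = [21.0, 21.1, 21.0, 21.2, 22.0, 22.1, 21.9, 23.5]
forwarded = list(deadband_filter(samples, min_change=0.5))
print(forwarded)
```

In this example only three of the eight samples are forwarded, and each of them represents a real change in temperature.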

How Does Edge Relate to the Internet of Things?

Edge computing and the Internet of Things (IoT) are practically inseparable. IoT is the vast, sprawling network of connected devices—smart thermostats, agricultural sensors, wearable health monitors—that are constantly churning out data.

Trying to funnel every last byte from billions of IoT devices to a central cloud would be a nightmare. The network would collapse, and the costs would be astronomical.

This is where edge computing becomes the hero of the story. It provides the local brainpower needed to process that firehose of IoT data right at the source. It’s what allows a smart traffic light to react to real-time conditions or a factory sensor to instantly shut down a malfunctioning assembly line. Edge makes IoT practical.

What Are the Biggest Implementation Challenges?

For all its benefits, rolling out an edge strategy isn't exactly a walk in the park. The biggest headaches usually come from managing and securing a system that's spread out all over the place.

Unlike a neat, centralized cloud data center, an edge network might have thousands of devices scattered across different cities or even continents. Every single one needs to be updated, monitored, and protected. This creates a huge new surface for potential security threats—each device is a possible backdoor for an attacker.
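To give a feel for the management problem, here is a small sketch of a fleet audit that lists devices running old firmware or failing to check in. Real fleet-management tools do far more than this. The `EdgeDevice` fields, device names, version numbers, and the 60-minute silence limit are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class EdgeDevice:
    device_id: str
    firmware: tuple              # version as a tuple, e.g. (1, 4, 2)
    minutes_since_checkin: int


def needs_attention(devices, required_firmware, max_silence_minutes=60):
    """Map device id to the list of reasons it needs an operator's attention."""
    report = {}
    for device in devices:
        reasons = []
        if device.firmware < required_firmware:   # tuples compare element by element
            reasons.append("outdated firmware")
        if device.minutes_since_checkin > max_silence_minutes:
            reasons.append("missed check-in")
        if reasons:
            report[device.device_id] = reasons
    return report


fleet = [
    EdgeDevice("store-014", (1, 4, 2), 5),
    EdgeDevice("plant-a-07", (1, 3, 9), 12),
    EdgeDevice("depot-22", (1, 4, 2), 480),
]
report = needs_attention(fleet, required_firmware=(1, 4, 0))
print(report)
```

With three devices this is trivial; with thousands spread across continents, the same audit has to run continuously and feed automated updates, which is where the operational cost comes from.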

Organizations have to get serious about robust security protocols and find sophisticated tools to manage their sprawling edge infrastructure. This complexity is a big reason why the global market is expected to keep growing as companies hunt for specialized solutions. One projection puts it past USD 547 billion by 2035, a lower figure than the Spherical Insights & Consulting estimate cited earlier, which shows how widely forecasts vary by source.
