What is Explainable AI: A Practical Guide for Leaders

Explainable AI (XAI) is a set of tools and methods that allow us to understand why a machine learning model makes the decisions it does. It's about peeling back the layers of complex algorithms to answer a simple, critical question: "Why did the AI do that?"

Instead of treating AI like an opaque crystal ball, XAI helps turn it into a transparent and trustworthy partner. As AI's decisions carry more weight in our daily lives, this shift isn't just nice to have—it's essential for responsible innovation.

Why We Need AI We Can Actually Understand

Imagine an AI denies someone a critical home loan, flags a medical scan for a life-threatening illness, or boots a promising candidate from a hiring pool. If you ask it for a reason, all you get is silence. This is the infamous "black box" problem—where an algorithm spits out a result without showing any of its work.

For years, the main goal was just getting the most accurate result possible. But today, that opacity is a massive risk for any organization.

As AI gets woven into high-stakes fields like healthcare and finance, leaders are feeling the pressure. Regulators, customers, and even internal teams are demanding more than just correct answers; they want clarity and accountability. This growing need for transparency is what’s pushing explainable AI to the forefront.

The Shift from Accuracy to Accountability

The conversation around AI is growing up. Performance still matters, of course, but companies are realizing that a decision they can't explain is a decision they can't truly stand behind.

This change is being driven by a few key factors:

- Regulatory Scrutiny: New laws such as the EU's landmark AI Act are starting to require a "right to explanation" for automated decisions. Transparency is quickly becoming a legal requirement.

- Customer Trust: People are naturally skeptical of systems that make important choices about their lives without any justification. To build and keep that trust, businesses have to be able to explain themselves clearly.

- Operational Integrity: Your own teams—from data scientists to developers—need to understand how a model works. It's the only way to debug it, find hidden biases, and make sure it's actually doing what you built it to do.

The core challenge is simple: a high-performing AI model that users don’t understand is not truly useful. Transparency, interpretability, and trust are just as critical as accuracy, if not more so.

What Is Explainable AI at a Glance

To put it simply, XAI is the solution to the "black box" problem that plagues so many powerful AI systems. It provides the methods to translate algorithmic logic into human-understandable terms.

Here's a quick breakdown:

| The Problem (Black Box AI) | The Solution (Explainable AI) |
|---|---|
| Decisions are made, but the "why" is unknown. | Provides clear reasons for each specific outcome. |
| Hidden biases can go undetected and cause harm. | Helps identify and correct unfair or skewed logic. |
| Debugging and improving the model is difficult. | Pinpoints where the model is succeeding or failing. |
| It’s hard to build trust with users and regulators. | Fosters accountability and makes compliance possible. |

Ultimately, XAI is about building a bridge between a machine’s calculation and our human need for understanding.

Framing the Solution

So, what is explainable AI in practice? It’s not a single button you press. It's a collection of techniques and a mindset focused on making AI’s thought process visible. As our roster of AI experts and keynote speakers consistently advises, it's a strategic necessity for any organization deploying AI responsibly.

These leading voices see explainability as the critical link between powerful technology and reliable, real-world results. By embracing XAI, organizations can move from just using AI to confidently partnering with it. This foundation of understanding is what separates a successful AI strategy from a costly and embarrassing failure.

The Business Case for AI Transparency

Let's move past the abstract ideas—explainable AI connects directly to your bottom line. This isn't just a trendy technical feature; it's a strategic pillar for building a more resilient, trustworthy, and competitive business.

The global market for explainable AI shows just how urgent this shift is. It's already valued at around USD 7.79 billion and is projected to reach USD 21.06 billion by 2030. This growth isn't just hype. It's being pushed by serious regulatory pressure and a growing demand for accountability in critical industries.

Build Unbreakable Customer Trust

Trust is the currency of modern business, and opaque "black box" AI systems spend it recklessly. When a customer is denied a loan or gets a weird recommendation from an algorithm they can't understand, their confidence disappears. The decision feels arbitrary and unfair because there's no reason behind it.

Explainable AI completely flips this script. By offering clear, human-friendly justifications for its actions, AI becomes a reliable partner instead of an inscrutable black box. This level of transparency is essential for keeping customers loyal and protecting your brand's reputation.

When you can explain why an AI made a decision, you can stand by that decision. This simple act of accountability is the bedrock of lasting stakeholder and customer trust.

The AI governance speakers on our roster constantly drive this point home: trust isn't a nice-to-have byproduct of good tech; it's a prerequisite. They work with organizations to build frameworks where every automated decision can be explained, defended, and understood.

Navigate the Complex Web of Compliance

The regulatory environment for AI is tightening fast. Governments worldwide are shifting from suggestions to strict enforcement, creating hard legal requirements for algorithmic accountability. The GDPR's rules on automated decision-making, often described as a "right to explanation," and the EU's comprehensive AI Act are just the start.

In high-stakes industries like finance and healthcare, compliance is non-negotiable.

- Financial Services: Regulators are cracking down, demanding that banks provide clear reasons for credit decisions to fight discriminatory practices. XAI delivers the audit trail needed to prove fairness.

- Healthcare: When an AI model suggests a diagnosis, doctors need to understand its reasoning. It’s the only way they can validate the recommendation and take professional responsibility for the patient's care.

- Human Resources: Explainability helps prove that hiring algorithms aren't just continuing old biases, which protects companies from major legal and reputational damage.

This is all closely tied to the broader field of Responsible AI. To meet these demands, smart organizations are putting a formal Responsible AI Policy in place to guide how they build and use AI systems.

Sharpen Your Competitive Edge

Transparency isn’t just about playing defense and managing risk—it's about speeding up innovation. When your technical teams can actually look inside the AI model, they gain incredible new capabilities that create a real competitive advantage.

An explainable system helps data scientists and engineers move faster and build better products. They can debug models more easily when things go wrong, spot and remove hidden biases that corrupt the results, and confirm that the AI is actually focusing on the right data points.

This internal clarity empowers your teams to fine-tune algorithms with confidence. The result is more robust, accurate, and reliable AI solutions that give you an edge. Ultimately, explainable AI turns a potential liability into a powerful asset that drives sustainable growth.

How to Open the AI Black Box

To really get what explainable AI is, you have to look under the hood. Peeking inside the "black box" isn't about becoming a data scientist overnight. It's about learning the language of transparency so you can have better conversations with your tech teams and make smarter decisions for the business.

Let’s pull back the curtain on the core techniques that make AI understandable, using simple analogies instead of dense, academic jargon.

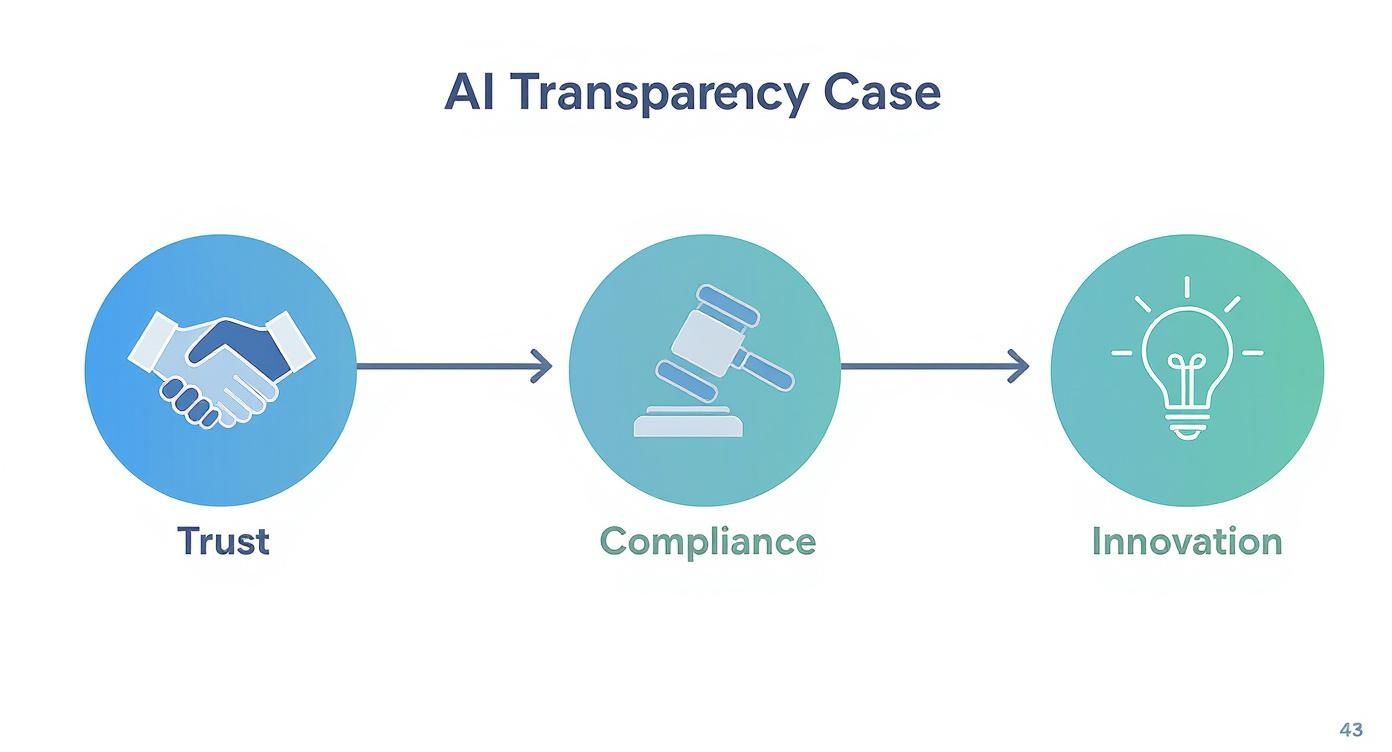

This infographic lays out the business case for AI transparency, showing exactly why opening the black box is a strategic must-do, not a nice-to-have.

As you can see, building trust with customers, nailing compliance, and driving real innovation are all connected. They’re the three legs of the stool supporting a responsible and successful AI strategy.

Model-Agnostic vs Model-Specific Techniques

The first thing to get your head around is the two main categories of XAI tools. Think of it like a mechanic's toolkit.

- Model-Agnostic Tools: These are your all-purpose wrenches. They’re designed to work on pretty much any AI model, no matter how it was built. That flexibility makes them incredibly popular.

- Model-Specific Tools: These are the custom-built instruments for a particular make and model. They only work for one type of algorithm—like a decision tree or a neural network—but they can give you a much more precise and detailed explanation for that specific system.

For most leaders, the model-agnostic tools are the ones to know, since they provide a universal way to ask for and receive explanations. Two of the most common methods you'll hear about are LIME and SHAP.

LIME: Asking "Why?" for a Single Moment

LIME (Local Interpretable Model-Agnostic Explanations) is one of the most intuitive XAI techniques out there. Its main job is to explain a single prediction that a complex model just made.

Imagine an AI model denies a credit card application. Using LIME is like asking the AI, "Why did you reject this specific person's application right now?"

LIME answers by creating a simpler, temporary model that mimics the black box's logic right around that one decision point. It then flags the few factors that were most important. For the credit application, it might show that a recent late payment and a high debt-to-income ratio were the top two reasons for denial. It gives you a focused, local explanation that’s easy to digest.
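For technical readers, the idea can be sketched in a few lines of plain Python. This is a simplified, from-scratch illustration of the local-surrogate idea, not the `lime` library itself. The `black_box` credit model, its coefficients, the applicant values, and the perturbation scales are all made up for the example, and late payments are treated as a continuous number to keep things short.

```python
import math
import random

def black_box(debt_to_income, late_payments):
    """Hypothetical opaque credit model: returns an approval probability."""
    score = 3.0 - 6.0 * debt_to_income - 0.9 * late_payments
    return 1.0 / (1.0 + math.exp(-score))

def solve(a, b):
    """Solve a small linear system a @ x = b by Gaussian elimination."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (m[i][n] - sum(m[i][j] * x[j] for j in range(i + 1, n))) / m[i][i]
    return x

def local_surrogate(model, instance, scales, n_samples=500, seed=0):
    """Fit a proximity-weighted linear model around one instance."""
    rng = random.Random(seed)
    rows, targets, weights = [], [], []
    for _ in range(n_samples):
        # Random offsets, expressed in units of each feature's scale
        z = [rng.gauss(0.0, 1.0) for _ in instance]
        point = [x + s * zi for x, s, zi in zip(instance, scales, z)]
        rows.append([1.0] + z)  # intercept term plus the offsets
        targets.append(model(*point))
        # Samples closer to the instance get more weight
        weights.append(math.exp(-sum(zi * zi for zi in z) / 2.0))
    k = len(instance) + 1
    # Weighted least squares via the normal equations
    xtwx = [[sum(w * r[i] * r[j] for r, w in zip(rows, weights)) for j in range(k)]
            for i in range(k)]
    xtwy = [sum(w * r[i] * y for r, w, y in zip(rows, weights, targets)) for i in range(k)]
    return solve(xtwx, xtwy)[1:]  # local effect per feature, intercept dropped

applicant = [0.45, 2]  # debt-to-income ratio, recent late payments
effects = local_surrogate(black_box, applicant, scales=[0.05, 0.5])
for name, effect in zip(["debt_to_income", "late_payments"], effects):
    print(f"{name}: {effect:+.3f}")
```

A negative effect means that nudging the feature upward lowers the approval probability for this particular applicant. The numbers only describe the model's behavior near this one application.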

SHAP: Calculating Each Player's Contribution

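SHAP (SHapley Additive exPlanations) gives you a fuller and more mathematically grounded explanation. Built on Shapley values from cooperative game theory, it gives every input factor a share of the gap between a specific prediction and a baseline, so the shares add up to the result. Each explanation still covers one prediction, but the values can be aggregated across many predictions to describe the model as a whole.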

Think of an AI model predicting a sports team will win a game. SHAP is like a sophisticated analyst who calculates exactly how much the star quarterback, the solid defense, and even the kicker each contributed to that win prediction.

SHAP values assign a specific weight to each input, showing whether it pushed the decision higher or lower. This provides a holistic view of the model's logic, making it a powerful tool for understanding both individual predictions and the model's overall behavior.
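To make the sports analogy concrete, here is a minimal sketch that computes exact Shapley values by brute force for a three-feature toy model. It is a from-scratch illustration of the underlying idea, not the `shap` library. The `win_score` model, the team ratings, and the choice to represent a "missing" feature by the league average are all assumptions made for the example.

```python
from itertools import permutations

def shapley_values(model, instance, baseline):
    """Exact Shapley values: average each feature's marginal
    contribution over every possible ordering of the features."""
    n = len(instance)
    totals = [0.0] * n
    orderings = list(permutations(range(n)))
    for order in orderings:
        current = list(baseline)  # start with every feature at its baseline
        previous = model(current)
        for i in order:
            current[i] = instance[i]  # reveal feature i
            value = model(current)
            totals[i] += value - previous
            previous = value
    return [t / len(orderings) for t in totals]

def win_score(features):
    """Hypothetical model: predicted win margin from three team ratings."""
    quarterback, defense, kicker = features
    return 10.0 * quarterback + 6.0 * defense + 2.0 * kicker + 4.0 * quarterback * defense

team = [0.9, 0.7, 0.5]            # this team's ratings
league_average = [0.5, 0.5, 0.5]  # assumed baseline
contributions = shapley_values(win_score, team, league_average)

for name, value in zip(["quarterback", "defense", "kicker"], contributions):
    print(f"{name}: {value:+.2f}")
print("total:", round(sum(contributions), 2))
```

The contributions sum to the difference between this team's prediction and the baseline prediction, which is the additive property that makes the output easy to audit. The kicker gets zero credit here because that rating matches the league average.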

The speakers we work with, who advise Fortune 500 companies, often point to SHAP for its consistency and its ability to paint a complete picture. This level of detail is crucial for high-stakes situations where every single factor has to be accounted for. For those looking to get started, our guide on [how to implement AI](https://speakabout.ai/blog/how-to-implement-ai) offers practical steps for integrating these principles.

A Quick Comparison of Common XAI Techniques

To pull this all together, here’s a quick rundown of how these methods stack up against each other. Understanding their sweet spots and their blind spots helps you see which tool is right for which job.

| Technique | How It Works (Analogy) | Best For | Limitation |
|---|---|---|---|
| LIME | Asking, "Why this specific decision right now?" | Quickly explaining individual predictions for non-technical users. | Explanations can be unstable and only reflect the model's logic for one instance. |
| SHAP | Calculating each player's exact contribution to the final score. | Deeply understanding feature importance for both individual and global explanations. | Can be computationally slow and expensive to run on very large datasets. |
| Rule-Based | A simple flowchart of "if-then" statements. | Industries where decisions must follow clear, auditable rules (e.g., compliance). | Often less accurate than complex models and cannot capture subtle patterns. |
| Attention | Highlighting the words in a sentence an AI focused on most. | Explaining outputs from language models, like translation or text summarization. | Primarily designed for specific types of neural networks (e.g., Transformers). |

Ultimately, choosing the right technique depends entirely on the question you're trying to answer. Are you troubleshooting a single weird result, or are you trying to validate the entire model's fairness? The answer will point you toward the right tool in the box.

Explainable AI in the Real World

This is where the theory behind explainable AI meets reality. Across some of the world's most critical industries, XAI isn't just an academic idea anymore. It's a hands-on tool that delivers real results by making automated decisions clear, fair, and trustworthy.

The speakers on our roster are living this shift firsthand, helping companies navigate the tricky process of implementing XAI where the stakes are highest. Their on-the-ground experience proves that transparency isn't just a feature; it's a fundamental requirement for doing business today.

Revolutionizing Healthcare Diagnostics

In healthcare, there’s no room for error. A doctor has to be able to trust an AI's recommendation before they act on it. XAI builds that trust by showing the AI's work.

Imagine an AI model analyzing a medical scan and flagging a potential tumor. XAI techniques can generate a "heat map" that highlights the regions of the image that most influenced its conclusion. This allows a radiologist to see what the AI saw and combine its analysis with their own expert clinical judgment. It’s a game-changer.
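One simple way to produce such a heat map is occlusion: hide one pixel at a time and record how much the model's score drops. The sketch below shows that idea on a tiny 4x4 grid. The `toy_detector` function is a made-up stand-in for a real scan classifier, and production systems typically mask larger patches or use gradient-based methods instead.

```python
def occlusion_heatmap(model, image, fill=0.0):
    """Score each pixel by how much the model output drops when it is masked."""
    base = model(image)
    heatmap = []
    for r, row in enumerate(image):
        heat_row = []
        for c, _ in enumerate(row):
            masked = [list(line) for line in image]  # copy so the original is untouched
            masked[r][c] = fill
            heat_row.append(base - model(masked))
        heatmap.append(heat_row)
    return heatmap

def toy_detector(image):
    """Hypothetical classifier: responds to brightness in the central 2x2 region."""
    return sum(image[r][c] for r in (1, 2) for c in (1, 2)) / 4.0

scan = [
    [0.1, 0.1, 0.1, 0.1],
    [0.1, 0.9, 0.8, 0.1],
    [0.1, 0.7, 0.9, 0.1],
    [0.1, 0.1, 0.1, 0.1],
]
heat = occlusion_heatmap(toy_detector, scan)

for row in heat:
    print(" ".join(f"{value:.3f}" for value in row))
```

Pixels the model ignores score zero, while the bright central pixels score highest. Those high scores are what a viewer would see rendered as the "hot" region on the scan.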

Healthcare is leading the charge in adopting explainable AI, driven by tough regulations and the absolute need for clinical transparency. The sector already accounts for a massive slice of the XAI market and is projected to grow at a blistering pace through 2030. This growth is fueled by new rules, like the FDA’s guidance for AI-driven medical devices, which now demand algorithmic clarity.

Fostering Trust in Finance

The entire financial world is built on trust and iron-clad regulations. When a bank uses an AI to approve or deny a loan, it can't just say "no" without giving a reason. Rules like the Equal Credit Opportunity Act (ECOA) require specific, understandable explanations for why someone was turned down.

XAI provides the toolkit to do just that. Instead of a vague rejection letter, an explainable model allows the bank to say, "Your application was declined mainly because of a high debt-to-income ratio and a few recent late payments." This doesn't just check a compliance box—it builds customer trust by being transparent.

By providing clear, compliant reasons for credit decisions, financial institutions can avoid accusations of bias, strengthen customer relationships, and build a more accountable operational framework.

This clarity is just as vital in areas like fraud detection and anti-money laundering (AML), where analysts need to justify to regulators precisely why a certain transaction was flagged as suspicious.

Ensuring Fairness in Human Resources

Hiring is another area where a biased AI can do real damage. An algorithm trained on old hiring data might accidentally learn to favor candidates from specific backgrounds, unfairly sidelining everyone else. Explainable AI is one of the best defenses we have for promoting fairness in recruitment.

When an AI system recommends a candidate, XAI can pull back the curtain on its decision-making process.

- Did it prioritize relevant skills and years of experience?

- Or did it give too much weight to things that don’t matter, like the name of their university or their zip code?

By making the AI’s logic visible, HR teams can confirm that the system aligns with the company’s diversity and inclusion goals. It helps them prove that hiring decisions are based on fair, job-relevant criteria, which protects the company from legal risk and helps build a more talented workforce. Seeing the many ways AI is deployed, like in [how AI is used in advertising](https://project-aeon.com/blogs/how-is-ai-used-in-advertising-the-essential-playbook-for-modern-marketing), really highlights why explainability is so crucial in these high-impact applications.
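The zip code question above can be checked with a rough audit: shuffle one feature across candidates and count how often the model's decision flips. The sketch below is a simplified variant of permutation importance. The `screening_model`, its rule, and the candidate data are entirely hypothetical.

```python
import random

def decision_change_rate(model, rows, feature_index, repeats=50, seed=0):
    """Average share of decisions that flip when one feature is shuffled across candidates."""
    rng = random.Random(seed)
    flips = 0
    for _ in range(repeats):
        column = [row[feature_index] for row in rows]
        rng.shuffle(column)
        for row, value in zip(rows, column):
            scrambled = list(row)
            scrambled[feature_index] = value
            flips += model(row) != model(scrambled)
    return flips / (repeats * len(rows))

def screening_model(candidate):
    """Hypothetical flawed screener: leans on zip code group and ignores experience."""
    skills_score, years_experience, zip_group = candidate
    return skills_score >= 5 and zip_group == 1

# Each candidate: [skills_score, years_experience, zip_group]
candidates = [
    [7, 2, 1], [3, 8, 0], [8, 5, 1], [5, 1, 0],
    [9, 10, 1], [4, 4, 1], [7, 7, 1], [8, 3, 0],
]
features = ["skills_score", "years_experience", "zip_group"]
rates = {
    name: decision_change_rate(screening_model, candidates, index)
    for index, name in enumerate(features)
}
for name, rate in rates.items():
    print(f"{name}: {rate:.2f}")
```

A feature whose shuffling never changes a decision, like years of experience in this toy model, is being ignored. A non-zero rate for the zip code group is the kind of red flag an HR team would want to investigate.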

These are just a handful of examples, but the core idea applies everywhere. Whether it's a retailer personalizing recommendations or a factory optimizing its supply chain, explainability transforms AI from an intimidating black box into a dependable partner. To see more, check out our guide on [AI use cases by industry](https://speakabout.ai/blog/ai-use-cases-by-industry).

Navigating the Challenges of XAI

Adopting explainable AI is a great goal, but the road to true transparency has its share of bumps and potholes. While the benefits are obvious, understanding the practical hurdles helps set realistic expectations and build a smarter XAI strategy. Achieving clarity isn’t as simple as flipping a switch; it means wrestling with some well-known trade-offs.

The most famous of these is the accuracy-explainability trade-off. It’s a classic dilemma: the AI models that are easiest to understand—like a simple decision tree—are often less powerful than their complex, “black box” cousins. A deep neural network might deliver incredible performance, but its tangled inner workings make it nearly impossible to interpret.

This creates a constant tension. Do you prioritize raw predictive power or a clear, simple explanation? For high-stakes decisions in healthcare or finance, a slightly less accurate but fully transparent model might be the only responsible choice.

The Risk of Misleading Interpretations

Even with the best XAI tools, an explanation isn't always a perfect reflection of reality. The explanations themselves can sometimes be misleading or oversimplified, creating a dangerous false sense of security.

For instance, a technique like LIME offers a local explanation for one specific decision. That's useful, but it doesn't guarantee the model behaves the same way everywhere else. An algorithm might look fair in one instance but hide deep-seated biases that only pop up under different conditions.

An explanation is only as good as its ability to be understood by its intended audience. A technically perfect but incomprehensible explanation fails to achieve the primary goal of XAI—to build trust and enable informed decision-making.

This is exactly why the human factor is so critical. The real goal is to generate insights that are genuinely useful to the people who need them, whether that’s a data scientist debugging a model or an executive answering to the board. A core part of strong [AI governance best practices](https://speakabout.ai/blog/ai-governance-best-practices) is making sure these explanations are validated for both clarity and accuracy.

The Hidden Costs and Technical Hurdles

Beyond the conceptual roadblocks, implementing XAI brings real technical and financial costs. Running sophisticated explanation methods like SHAP can be computationally brutal, demanding serious processing power and time, especially with large datasets.
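A quick back-of-the-envelope calculation shows where that cost comes from. An exact Shapley computation has to score every possible subset of features, so the work doubles with each feature added. The one-millisecond cost per model call below is an assumption chosen purely for illustration.

```python
def exact_shapley_evaluations(n_features):
    """Feature subsets (coalitions) an exact Shapley computation has to score."""
    return 2 ** n_features

# Assumed cost of a single model call, for illustration only.
SECONDS_PER_EVALUATION = 0.001

for n in (10, 20, 30):
    evaluations = exact_shapley_evaluations(n)
    hours = evaluations * SECONDS_PER_EVALUATION / 3600
    print(f"{n} features: {evaluations:,} evaluations, about {hours:,.1f} hours per explanation")
```

This is why practical tools rely on sampling and model-specific shortcuts rather than the exact calculation. Those approximations cut the cost but do not eliminate it.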

These operational demands can slow down development cycles and drive up infrastructure expenses. For any organization working at scale, the cost of generating real-time explanations for every single AI decision can quickly become a major barrier.

Finally, there’s a big skills gap. We have a shortage of data scientists and engineers who are experts at both building advanced AI models and implementing XAI frameworks effectively. Just finding the right people to navigate these challenges is a hurdle many organizations face on their journey toward AI transparency.

Bring AI Transparency to Your Next Event

Understanding explainable AI is no longer confined to the data science lab. It's now a fundamental part of modern leadership. As we've seen, the principles of transparency and accountability are non-negotiable for any organization serious about building an effective and responsible AI strategy. The big question is how to move from theory to action.

The most powerful way to do that is to bring this expertise directly to your team. Our roster of expert speakers cuts through the noise, demystifies the jargon, and hands your people a clear playbook. This is how abstract concepts become tangible business advantages and how you can start leading the conversation on trustworthy AI.

Expert Voices on Explainable AI

We represent the world’s leading minds on AI ethics, governance, and transparency. These aren’t just academics; they’re industry veterans who have been in the trenches, building and guiding AI systems at the highest levels. They bring hard-won, practical insights that connect with everyone from the C-suite to the engineering floor.

Each speaker brings a unique angle to making AI accountable:

- For Leadership Audiences: A session like From Black Box to Boardroom: Making AI Accountable is perfect for translating dense technical challenges into clear strategic imperatives that executives can act on.

- For Technical Teams: A hands-on workshop can dive deep into specific XAI techniques, giving your data scientists and engineers the practical guidance they need to build transparent systems from the ground up.

- For All Stakeholders: A talk on The Leader's Playbook for Trustworthy AI can rally your entire organization around a shared vision for ethical innovation and responsible growth.

Hiring an expert speaker is the definitive next step to ensure your team not only understands the principles of explainable AI but is also prepared to implement them effectively.

When you host one of our speakers, you give your team a direct line to the forefront of AI thought leadership. They’ll walk away with the confidence and clarity required to tackle the challenges of AI transparency head-on and drive your organization forward responsibly.

Ready to make AI transparency a priority at your next conference, workshop, or leadership summit? Explore our roster and find the expert who can transform your team’s understanding of this critical field.

Answering the Tough Questions About XAI

As leaders and event planners start digging into artificial intelligence, a few key questions about XAI always surface. Getting these answers right is the first step toward moving from a vague awareness of explainable AI to a practical, working knowledge. Let's tackle the most common ones we hear.

Our roster of AI speakers breaks these concepts down all the time, helping entire organizations build a shared language around AI transparency. Their real goal is to cut through the jargon and give teams the confidence to make smart, informed decisions.

What Is the Difference Between Explainable AI and Interpretable AI?

People often use these terms interchangeably, but there's a small yet crucial difference that really clarifies how we get to transparency.

- Interpretable AI refers to models that are simple enough to understand just by looking at them. Think of a basic decision tree or a linear regression model—their logic is so straightforward that a person can trace the path from input to output. They are "white box" models by design.

- Explainable AI (XAI) is a much wider umbrella. It’s the collection of techniques we use to crack open complex, "black box" models after they've already been built. Tools like LIME and SHAP are the classic examples here; they’re designed specifically to translate the inner workings of complicated algorithms into insights a human can actually use.

So, in short: interpretability is about building simple, transparent models from the start. Explainability is about making the complex ones understandable after the fact.
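To see what "interpretable by design" looks like, consider a small hand-written decision tree. Nothing needs to be explained after the fact because the model can report the exact rules it applied. The thresholds and feature names below are invented for the example and are not real lending criteria.

```python
def approve_loan(income, debt_to_income, late_payments):
    """Hypothetical decision tree; returns the decision and the rules that led to it."""
    path = []
    if debt_to_income > 0.40:
        path.append("debt_to_income > 0.40")
        return "deny", path
    path.append("debt_to_income <= 0.40")
    if late_payments >= 2:
        path.append("late_payments >= 2")
        return "deny", path
    path.append("late_payments < 2")
    if income >= 40000:
        path.append("income >= 40000")
        return "approve", path
    path.append("income < 40000")
    return "refer to human review", path

decision, reasons = approve_loan(income=52000, debt_to_income=0.35, late_payments=3)
print(decision)
for rule in reasons:
    print(" -", rule)
```

Every outcome comes with a traceable path from input to output. A complex model would need a tool like LIME or SHAP to approximate this kind of trace.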

Is Explainable AI Required by Law?

The legal ground is shifting under our feet, and what was once a "nice-to-have" is quickly becoming a legal necessity, especially in high-stakes fields.

Regulations like the EU's GDPR, with what is often called its "right to explanation," and the sweeping AI Act are setting a new global bar for transparency. These rules create real legal duties for companies, particularly when AI makes decisions that seriously affect people's lives, like in credit scoring or medical diagnostics.

While it’s not yet a universal mandate for every single AI app, the direction of travel is clear. Regulators are demanding that organizations justify their automated decisions, and that pressure is only going to increase in critical sectors like finance and healthcare.

Can Any AI Model Be Made Explainable?

For the most part, yes. Thanks to the rise of model-agnostic XAI techniques, it's now possible to wrap an explanatory layer around almost any algorithm you can think of.

Tools like LIME and SHAP are so powerful because they don’t need to know the model's internal architecture; they just poke and prod it from the outside to figure out how it thinks. This means even the gnarliest deep learning systems can be put under the microscope.

But—and this is a big but—the quality of the explanation can vary wildly depending on the model’s complexity and the specific situation.

The real challenge isn't just producing an explanation. It's producing one that is both true to the model's logic and genuinely useful to a human. That's the final, crucial step in closing the gap between machine intelligence and human understanding.

Ready to bring this level of clarity to your organization? Speak About AI connects you with leading experts who can demystify these topics for your audience. Book a top-tier AI speaker for your next event by visiting us at https://speakabout.ai.